More and more people are doing video conferencing everyday, and for that to be possible, the video has to be encoded before being sent over the network. The most efficient and most popular codec at this time is H264, and since the UVC (USB Video Class) specification 1.1, there is support for H264 encoding cameras.

One such camera is the Logitech C920. A really great camera which can produce a 1080p H264-encoded stream at 30 fps. As part of my job for Collabora, I was tasked to add support for such a camera in GStreamer. After months of work, it’s finally done and has now been integrated upstream into gst-plugins-bad 0.10 (port to GST 1.0 pending).

One important aspect here is that when you are capturing a high quality, high resolution H264 stream, you don’t want to be wasting your CPU to decode your own video in order to show a preview window during a video chat, so it was important for me to be able to capture both H264 and raw data from the camera. For this reason, I have decided to create a camerabin2-style source: uvch264_src.

Uvch264_src is a source which implements the GstBaseCameraSrc API. This means that instead of the traditional ‘src’ pad, it provides instead three distinct source pads: vidsrc, imgsrc and vfsrc. The GstBaseCameraSrc API is based heavily on the concept of a “Camera” application for phones. As such, the vidsrc is meant as a source for recording video, imgsrc as a source for taking a single-picture and vfsrc as a source for the viewfinder (preview) window. A ‘mode’ property is used to switch between video-mode and image-mode for capture. The uvch264_src source only supports video mode however, and the imgsrc will never be used.

When the element goes to PLAYING, only the vfsrc will output data, and you have to send the “start-capture” action signal to activate the vidsrc/imgsrc pads, and send the “stop-capture” action signal to stop capturing from the vidsrc/imgsrc pads. Note that vfsrc will be outputting data when in PLAYING, so it must always be linked (link it to fakesink if you don’t need the preview, otherwise you’ll get a not-linked error). If you want to test this on the command line (gst-launch) you can set the ‘auto-start’ property to TRUE and the uvch264_src will automatically start the capture after going to PLAYING.

You can request H264, MJPG, and raw data from the vidsrc, but only MJPG and raw data from the vfsrc. When requesting H264 from the vidsrc, then the max resolution for the vfsrc will be 640×480, which can be served as jpg or as raw (decoded from jpg). So if you don’t want to use any CPU for decoding, you should ask for a raw resolution lower than 432×240 (with the C920) which will directly capture YUV from the camera. Any higher resolution won’t be able to go through the usb’s bandwidth and the preview will have to be captured in mjpg (uvch264_src will take care of that for you).

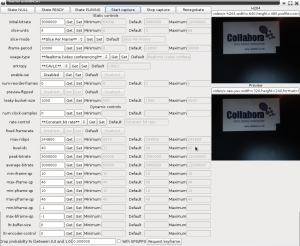

The source has two types of controls, the static controls which must be set in READY state, and DYNAMIC controls which can be dynamically changed in PLAYING state. The description of each property will specify whether that property is a static or dynamic control, as well as its flags. Here are the supported static controls : initial-bitrate, slice-units, slice-mode, iframe-period, usage-type, entropy, enable-sei, num-reorder-frames, preview-flipped and leaky-bucket-size. The dynamic controls are : rate-control, fixed-framerate, level-idc, peak-bitrate, average-bitrate, min-iframe-qp, max-iframe-qp, min-pframe-qp, max-pframe-qp, min-bframe-qp, max-bframe-qp, ltr-buffer-size and ltr-encoder-control.

Each control will have a minimum, maximum and default value, and those are specific to each camera and need to be probed when the element is in READY state. For that reason, I have added three element actions to the source in order to probe those settings : get-enum-setting, get-boolean-setting and get-int-setting.

These functions will return TRUE if the property is valid and the information was successfully retrieved, or FALSE if the property is invalid (giving an

invalid name or a boolean property to get_int_setting for example) or if the camera returned an error trying to probe its capabilities.

The prototype of the signals are :

- gboolean get_enum_setting (GstElement *object, char *property, gint *mask, gint *default);

Where the mask is a bit field specifying which enums can be set, where the bit being set is (1 << enum_value).

For example, the ‘slice-mode’ enum can only be ignored (0) or slices/frame (3), so the mask returned would be : 0x09

That is equivalent to (1 << 0 | 1 << 3) which is :

(1 << UVC_H264_SLICEMODE_IGNORED) | (1 << UVC_H264_SLICEMODE_SLICEPERFRAME) - gboolean get_int_setting (GstElement *object, char *property, gint *min, gint *def, gint *max);

This one gives the minimum, maximum and default values for a property. If a property has min and max to the same value, then the property cannot be changed, for example the C920 has num-reorder-frames setting: min=0, def=0 and max=0, and it means the C920 doesn’t support reorder frames. - gboolean get_boolean_setting (GstElement *object, char *property, gboolean *changeable, gboolean *default_value);

The boolean value will have changeable=TRUE only if changing the property will have an effect on the encoder, for example, the C920 does not support the preview-flipped property, so that one would have changeable=FALSE (and default value is FALSE in this case), but it does support the enable-sei property so that one would have changeable=TRUE (with a default value of FALSE).

This should give you all the information you need to know which settings are available on the hardware, and from there, be able to control the properties

that are available.

Since these are element actions, they are called this way :

gboolean return_value, changeable, default_bool; gint mask, minimum, maximum, default_int, default_enum; g_signal_emit_by_name (G_OBJECT(element), "get-enum-setting", "slice-mode", &mask, &default_enum, &return_value, NULL); g_signal_emit_by_name (G_OBJECT(element), "get-boolean-setting", "enable-sei", &changeable, &default_bool, &return_value, NULL); g_signal_emit_by_name (G_OBJECT(element), "get-int-setting", "iframe-period", &minimum, &default_int &maximum, &return_value, NULL);

Apart from that, the source also supports the GstForceKeyUnit video event for dynamically requesting keyframes, as well as custom-upstream events to control LTR (Long-Term Reference frames), bitrate, QP, rate-control and level-idc, through, respectively, the uvc-h264-ltr-picture-control, uvc-h264-bitrate-control, uvc-h264-qp-control, uvc-h264-rate-control and uvc-h264-level-idc custom upstream events (read the code for more information!). The source also supports receiving the ‘renegotiate’ custom upstream event which will make it renegotiate the according to the caps on its pads. This is useful if you want to enable/disable h264 streaming or switch resolution/framerate easily while the pipeline is running; Simply change your caps and send the renegotiate event.

I have written a GUI test application which you can use to test the camera and the source’s various features. It can also serve as a reference implementation on how the source can be used. The test application resides in gst-plugins-bad, under tests/examples/uvch264/ (make sure to run it from its source directory though).

You can also use this example gst-launch pipeline for testing the capture of the camera. This will capture a small preview window as well as an h264 stream in 1080p that will be decoded locally :

gst-launch uvch264_src device=/dev/video1 name=src auto-start=true src.vfsrc ! queue ! “video/x-raw-yuv,width=320,height=240,framerate=30/1” ! xvimagesink src.vidsrc ! queue ! video/x-h264,width=1920,height=1080,framerate=30/1,profile=constrained-baseline ! h264parse ! ffdec_h264 ! xvimagesink

That’s about it. If you are interested in using uvch264_src to capture from one of the UVC H264 encoding cameras, make sure you upgrade to the latest git versions of gstreamer, gst-plugins-base, gst-plugins-good and gst-plugins-bad (or 0.10.37+ for gstreamer/gst-plugins-base, 0.10.32 for gst-plugins-good and 0.10.24 for gst-plugins-bad, whenever those versions get released).

I would like to thank Collabora and Cisco for sponsoring me to work on this great project, it couldn’t have been possible without them!

If you have any more questions about this subject, feel free to contact me.

Enjoy!